This blog post was published on Hortonworks.com before the merger with Cloudera. Some links, resources, or references may no longer be accurate.

Guest Blog by Sahithi Gunna, Senior Solutions Engineer, BlueData

Running unmodified open source distributed computing frameworks on Docker containers has long been one of BlueData’s core value propositions.

With that in mind, Hortonworks was one of BlueData’s first partners in the Apache Hadoop and Big Data ecosystem; the BlueData EPIC software platform was first certified for the Hortonworks Data Platform (HDP) back in 2014. Since then, we have added support for related HDP services and products such as Ambari, Atlas, Ranger, and more.

With BlueData EPIC, our customers have gained greater agility, flexibility, and cost savings; they can deliver faster time-to-insights, with the ability to easily deploy multiple versions of HDP in multi-tenant containerized environments. Their data science teams and analysts can spin up on-demand HDP compute clusters for Big Data analytics in just a few mouse clicks – providing instant access to a new containerized HDP environment with enterprise-grade security, data governance, and more.

As part of our ongoing partnership with Hortonworks, we are excited to announce a new deeper level of certification and collaboration: our BlueData EPIC software is now QATS (Quality Assured Testing Suite) certified.

What is the Hortonworks QATS Program?

The QATS program is Hortonworks’ highest certification level, with rigorous testing across the full breadth of HDP services. It focuses on testing all the services running with HDP, and it validates the features and functions of the HDP cluster.

QATS is a product integration certification program designed to rigorously test Software, File System, Next-Gen Hardware and Containers with Hortonworks Data Platform (HDP). With dedicated Hortonworks engineering resources to continuously and thoroughly test each release of HDP, QATS ensures that solutions are validated for a comprehensive suite of use cases and deliver high performance, under rigorous loads.

This new certification enables Hortonworks and BlueData to provide best-in-class support to our customers. Our teams will continue to collaborate at various levels – across engineering R&D as well as our go-to-market plans – to ensure continuous product compatibility and high-quality customer service. And we will continue to run QATS tests against future product versions.

QATS Test Coverage

The Hortonworks QATS certification covers an extensive range of tests including:

- Functional tests of HDP clusters running on BlueData EPIC (covering system components including Docker containers, operating system, storage, and networking)

- Functional testing of all HDP components including Hive, Hive with LLAP, HBase, and Spark with Kerberos security

- Functional testing of policies for data at rest encryption, wire encryption, and more

- Integration tests to ensure that all services are configured, integrated, and working well with each other

- Stress testing of different components that are used frequently

- Performance testing and validation

- Concurrency and multi-tenancy testing of Hive, Spark, HBase, and other highly utilized services

- Operational readiness testing to verify alerts, monitoring, auditing, and more

QATS Certification Process

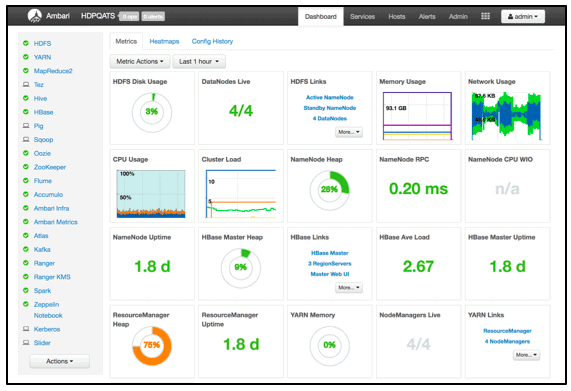

All the QATS tests were executed on an HDP cluster running in Docker containers on the BlueData EPIC software platform. The tests were extensive and rigorous, with enterprise-grade functionality such as Kerberos security and high availability (HA) enabled. The services tested include HDFS, YARN, Hive, HBase, Spark, Ranger, Atlas, and more – as shown in the list on the far left side of the Ambari screenshot below.

HDP Cluster Environment

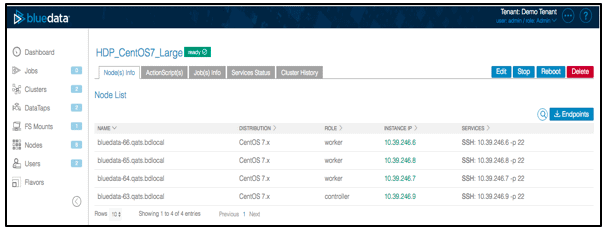

The screenshot below shows the HDP cluster environment that was tested, running on BlueData EPIC. The environment consisted of a four-node HDP cluster with 96 GB RAM, 10 cores, and 1 TB disk storage.

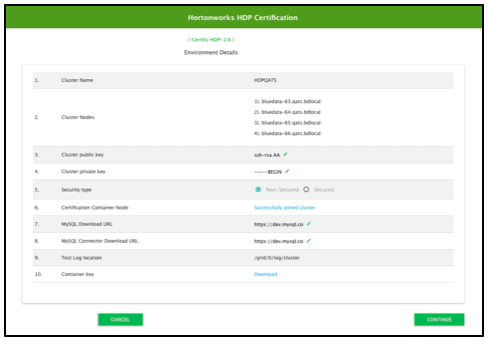

The QATS certification program runs on an Ambari node and the environment details in the screenshot below show configurations for database, log location, HDP cluster details, and other security considerations:

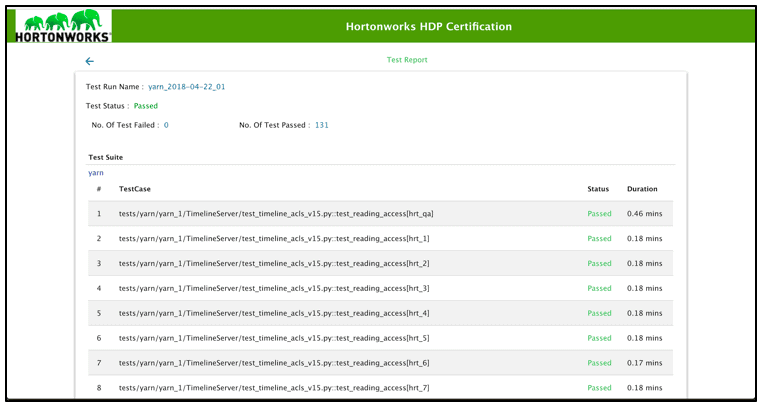

After validating each service, QATS results are shown with detailed information about the tests covered, status, and the duration of the test run. As an example, this screenshot provides high-level information about some of the YARN tests:

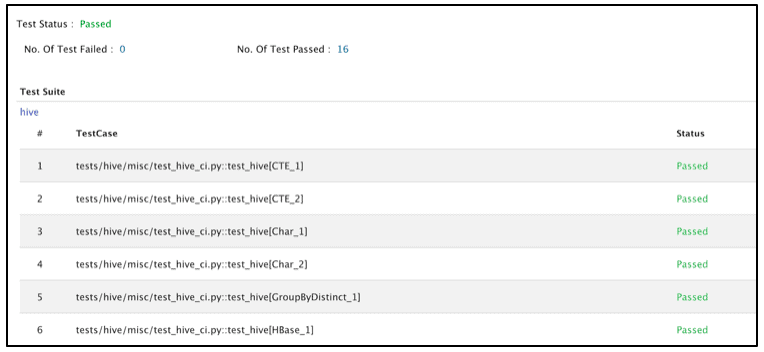

And this screenshot shows similar information for some of the Hive tests:

BlueData EPIC passed more than 4,000 tests across the full range of HDP services (e.g. HDFS, YARN, Hive with LLAP, Spark, Ranger, Atlas, and more) covering a comprehensive suite of use cases at high performance levels under rigorous loads. The tests covered 23 functional dependency points for HDP, and BlueData passed with a success rate of 100%.

As a result, BlueData has earned the valuable Hortonworks QATS certification badge. This deeper level of certification validates that our joint customers can run containerized HDP workloads on BlueData EPIC while ensuring complete interoperability and high performance. And the QATS program is an ongoing effort: we’ll continue to work with Hortonworks to ensure that new HDP versions, including new and extended services, are certified with BlueData EPIC.

BlueData-Hortonworks Collaboration

Through the QATS program and our continued partnership with Hortonworks, we can help enterprises to accelerate their Big Data deployments.

The traditional bare-metal architecture for on-premises deployments of Hadoop and other Big Data technologies can be complex to implement and expand. From the beginning, BlueData’s mission has been to fundamentally transform this deployment model with a new approach – leveraging the power of Docker containers.

Most enterprise organizations want a more agile, flexible, and elastic architecture for their Big Data infrastructure. And they may want to expand beyond Hadoop and Spark to other distributed computing frameworks for Big Data analytics as well as other data science, machine learning (ML), and deep learning (DL) tools for Artificial Intelligence (AI) use cases. They want a cloud-like experience (self-service, on-demand, elastic, and automated), but they also want enterprise-grade security and high performance. They want to accelerate their time-to-insights and time-to-value, while leveraging their existing infrastructure investments.

With BlueData EPIC, our customers can quickly spin up containerized compute clusters for the full range of HDP services – as well as other related analytics, data science, and ML / DL tools – to perform in-place analytics against their existing Hortonworks HDFS data lake. And they can achieve significantly lower total cost of ownership for their data infrastructure, while ensuring security and performance.

Ultimately, the BlueData-Hortonworks partnership helps these customers to deliver more innovation and greater business value from their Big Data deployments – with a cloud-like experience and secure multi-tenant architecture whether on-premises, in a private cloud, in the public cloud, in a hybrid cloud, or across multiple clouds. Through deeper collaboration under the auspices of the QATS program, we will continue to work closely with Hortonworks to offer additional value-added solutions – helping our customers to drive successful business outcomes for their digital transformation initiatives.

To learn more about BlueData and the Hortonworks Data Platform (HDP), you can check out our Hortonworks partner microsite and read our joint solution brief: